Our PhD Researcher, Filip Wichrowski, took part in O’Bayes 2025, a conference devoted to Bayesian methods in statistics. It was held in Athens, the cradle of European culture, from June 8 to 12, 2025. All talks took place at the Stavros Niarchos Foundation Cultural Center, a cultural and educational complex known for its stunning contemporary architecture that blends harmoniously into its green surroundings. The conference hosted prominent speakers from around the world, well known and respected in the Bayesian community, who presented their most recent and relevant achievements. Each presentation was followed by a structured discussion, including commentary from a designated discussant and an open floor for further exchange. For those less familiar with Bayesian methods, the tutorials offered a valuable opportunity to build a solid foundation.

During the conference, Filip had the opportunity to meet many young researchers from around the world, exchange ideas and perspectives, and gain inspiration for his own research. The conference concluded with a gala dinner with excellent food at a beautifully located seaside restaurant. Overall, the event was an enriching and motivating experience, both scientifically and socially.

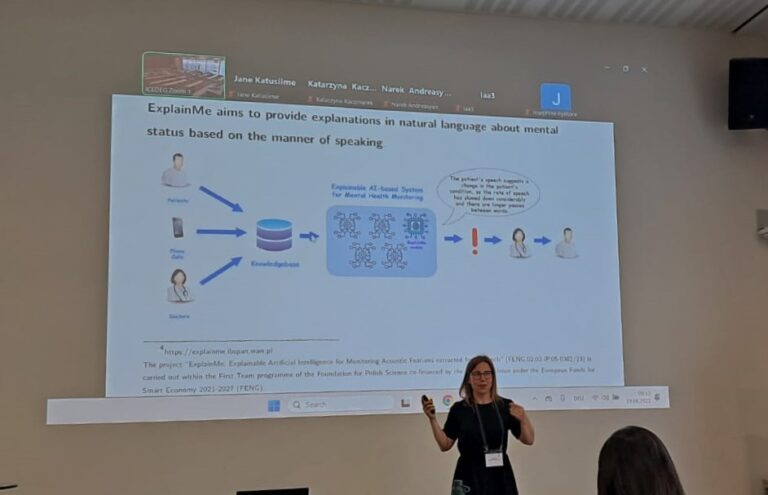

Filip presented a poster titled “Prior-informed soft label propagation for partially supervised hidden Markov models”. Hidden Markov models (HMMs) are widely used latent-variable models that provide flexible approaches to learning from sequential data. However, standard HMM training typically relies on either fully supervised or fully unsupervised methods, both of which can be suboptimal in many real-world settings where data labeling is often expensive or imperfect. A solution proposed by [1] integrates partial supervision into HMMs by modifying the forward–backward recursions, effectively constraining certain paths in the state lattice when partial labels are known. While this approach elegantly handles hard labeling constraints, it does not extend naturally to scenarios with soft or uncertain labels, because the forward–backward definition must remain consistent across all time steps. In this work, we propose a novel semi-supervised HMM training strategy that relocates the burden of partial labeling from the forward–backward variables to the emission probabilities, which are scaled according to a proposed weight matrix based on prior beliefs. By incorporating partial supervision directly into the emission densities, we preserve the standard HMM recursion while leveraging soft labeling information as a prior distribution. We also propose a method for label propagation that extends a known label to its neighbourhood by convolving an indicator function with a Gaussian kernel. Our method naturally accommodates cases in which labels are not entirely reliable, whether due to subjective human annotation or automated labeling processes prone to error. Finally, we demonstrate the effectiveness of the proposed approach through a brief experiment on simulated data.
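To make the idea in the abstract more concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the implementation from the poster: the function names `propagate_labels` and `forward_filter`, the `-1` marker for unlabeled time steps, the `confidence` parameter, and the way the propagated label is mixed with a uniform prior are all hypothetical choices. The sketch spreads one known label over its neighbourhood with a Gaussian kernel, scales the emission likelihoods by the resulting weight matrix, and then runs the unmodified forward recursion.

```python
import numpy as np


def propagate_labels(labels, n_states, sigma=2.0, confidence=0.9):
    """Build a (T, K) weight matrix from sparse hard labels.

    labels: length-T integer array, -1 where no label is known
            (hypothetical convention, not taken from the poster).
    """
    labels = np.asarray(labels)
    T = labels.shape[0]
    radius = int(np.ceil(3 * sigma))
    offsets = np.arange(-radius, radius + 1)
    # Gaussian kernel with peak value `confidence` at offset 0.
    kernel = confidence * np.exp(-0.5 * (offsets / sigma) ** 2)

    conv = np.empty((T, n_states))
    for k in range(n_states):
        indicator = (labels == k).astype(float)
        # Full convolution, then crop so that conv[t] is centred on t.
        full = np.convolve(indicator, kernel, mode="full")
        conv[:, k] = full[radius:radius + T]

    # Cap the total label mass per time step at 1, then give the
    # remaining mass to a uniform prior over states (assumed design).
    total = conv.sum(axis=1, keepdims=True)
    conv = conv / np.maximum(total, 1.0)
    total = np.minimum(total, 1.0)
    return conv + (1.0 - total) / n_states


def forward_filter(pi, A, B):
    """Standard scaled forward recursion.

    B[t, k] is the (possibly weighted) emission likelihood of state k at t.
    Returns the filtered state probabilities and the log normaliser.
    """
    T, K = B.shape
    post = np.empty((T, K))
    alpha = pi * B[0]
    loglik = 0.0
    for t in range(T):
        if t > 0:
            alpha = (post[t - 1] @ A) * B[t]
        c = alpha.sum()
        loglik += np.log(c)
        post[t] = alpha / c
    return post, loglik


# Toy example: two states with unit-variance Gaussian emissions.
rng = np.random.default_rng(0)
T, K = 40, 2
means = np.array([0.0, 1.0])
pi = np.array([0.5, 0.5])
A = np.array([[0.9, 0.1],
              [0.1, 0.9]])
obs = rng.normal(0.5, 1.0, size=T)

# Emission likelihoods b_k(x_t) for every time step and state.
B = np.exp(-0.5 * (obs[:, None] - means[None, :]) ** 2) / np.sqrt(2 * np.pi)

labels = np.full(T, -1)
labels[20] = 1                      # a single known label at t = 20
W = propagate_labels(labels, K)     # soft labels around t = 20

# Partial supervision enters only through the emissions.
post_w, _ = forward_filter(pi, A, W * B)
```

Far from any label the rows of `W` are uniform, which rescales all states equally and leaves the filtered probabilities unchanged. Close to the label, the weights favour the labeled state without ruling out the others, which is what distinguishes a soft label from a hard constraint.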

Here you can find Filip’s poster: